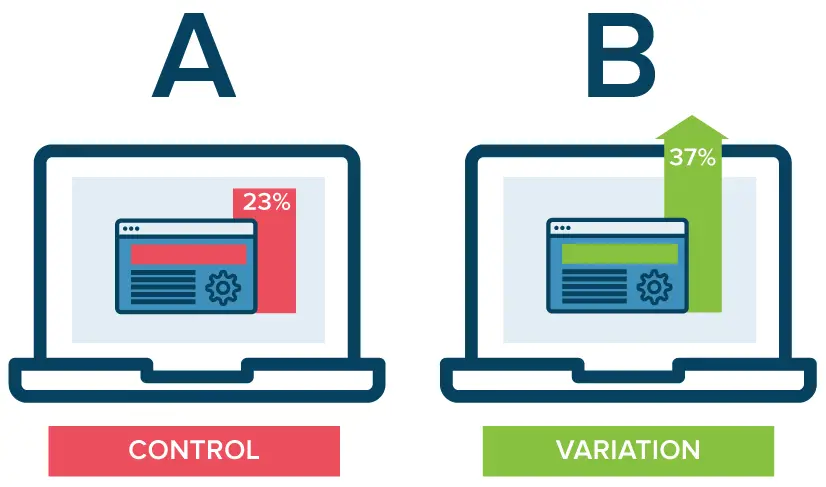

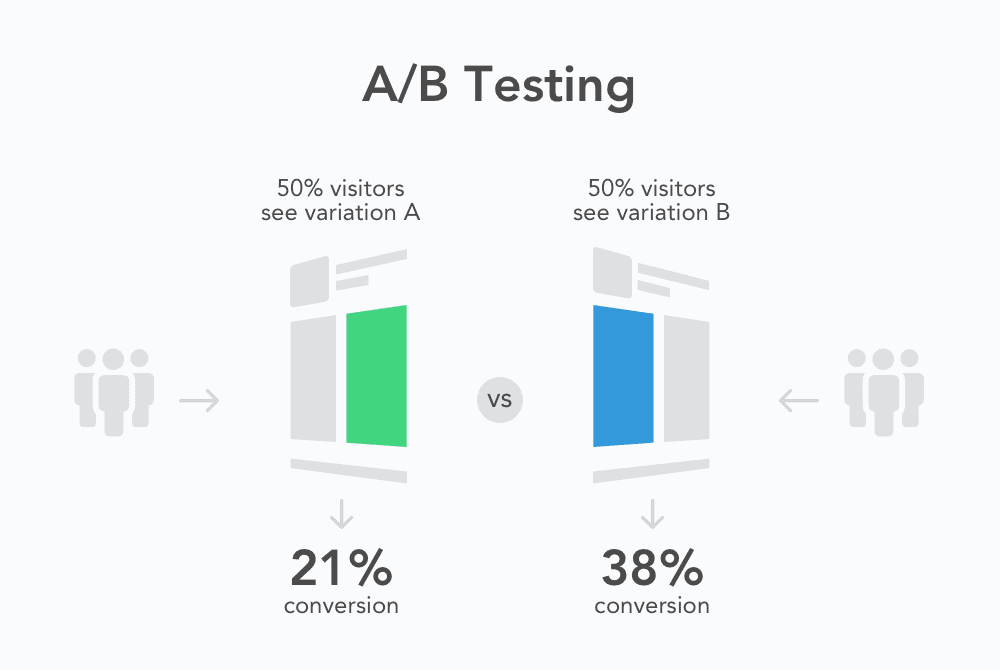

In 2025, A/B testing remains the cornerstone of conversion rate optimisation, enabling teams to run controlled experiments and make data-driven decisions that materially improve outcomes. A rigorous A/B testing program combines the scientific method with modern tooling to compare a control version against one or more variant versions across user segments, identifying winning experiences and optimising digital assets. To operationalize this, build an Experimentation Culture that codifies hypothesis-driven tests, clear conversion goals, and statistical hypothesis testing procedures; for example, run A/B/n tests alongside multivariate tests when comparing design variations on high-traffic pages. Consider consulting a CRO agency or using enterprise testing toolsets to scale; for practical resources on advanced implementation, explore a comprehensive A/B testing strategies resource that outlines frameworks for split testing, feature flagging, and two-sample hypothesis testing. Action steps: define key metrics, segment traffic, calculate the sample size needed to detect your target effect at the chosen significance level, and iterate rapidly.

Visual roadmap showing experimentation program stages from hypothesis to production lessons learned

Decoding the Power of A/B Testing: An Essential Introduction to Conversion Rate Optimization

A clear introduction to A/B testing frames it as a randomized experiment that isolates the causal impact of design variations on conversion rate and user experience. Begin by articulating a strategic plan that ties tests to business objectives, selects conversion goals, and maps customer journeys so every A/B testing decision is purposeful. Use data from analytics and user-experience research to craft hypotheses and prioritize tests by expected return on investment; test features like promotional codes and personalization to measure their lift. Organizations needing implementation support can review enterprise service providers, such as an enterprise experimentation and data consultancy, for technical integrations, campaign management, and statistical analysis pipelines. Practical tip: combine split testing with multivariate tests where interactions matter, and use bucket testing for large-scale webpage comparisons. Document each randomized controlled experiment, ensure tracking for key metrics, and maintain a test registry.

Diagram of hypothesis-to-experiment workflow with metric tracking and priority scoring

Strategic A/B Testing Plan: Unveiling Core Concepts for Company Success

A strategic A/B testing plan for your company should prioritize tests via impact-effort matrices and incorporate multivariate testing selectively for complex pages. Start with two-sample hypothesis testing to validate small, high-value changes and ramp up to more sophisticated experiments when traffic allows, using multivariate tests and A/B/n tests for broader insights. Integrate customer data and behavioral insights to build personalization rules and target testing segments such as mobile app users versus desktop visitors. Emphasize conversion rate optimisation best practices: maintain a control version baseline, run visually distinct variant versions, and instrument experiments so that statistical significance can be assessed. For technical partners and rapid mobile experimentation, consider mobile-focused feature flag vendors and testing partners, such as a mobile app A/B testing optimisation partner, to ensure accurate event tracking and robust statistical analysis. Finalize a roll-out plan, criteria for winner selection, and an iterative roadmap for continuous testing.
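The impact-effort prioritization mentioned above can be sketched in a few lines. This is only an illustration: the `TestIdea` class, the 1 to 5 scoring scale, the threshold of 3, and the quadrant labels are all assumptions rather than a standard the article prescribes.

```python
from dataclasses import dataclass


@dataclass
class TestIdea:
    name: str
    impact: int  # expected impact, 1 (low) to 5 (high); hypothetical scale
    effort: int  # implementation effort, 1 (low) to 5 (high); hypothetical scale


def quadrant(idea, threshold=3):
    """Place an idea in one of the four cells of an impact-effort matrix."""
    high_impact = idea.impact >= threshold
    low_effort = idea.effort < threshold
    if high_impact and low_effort:
        return "quick win"
    if high_impact:
        return "major project"
    if low_effort:
        return "fill-in"
    return "deprioritize"


def prioritize(ideas):
    """Order ideas by impact per unit of effort, highest first."""
    return sorted(ideas, key=lambda idea: idea.impact / idea.effort, reverse=True)


backlog = [
    TestIdea("new pricing page", impact=5, effort=5),
    TestIdea("checkout button copy", impact=4, effort=1),
    TestIdea("footer link order", impact=1, effort=2),
]
for idea in prioritize(backlog):
    print(f"{idea.name}: {quadrant(idea)}")
```

Scores like these are subjective, so it usually helps to have more than one person rate each idea before ranking the backlog.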

Flowchart showing priority scoring, segmentation, and multivariate test decision criteria

Critical Factors Driving Remarkable CRO Results in 2025

Remarkable CRO results stem from disciplined test design, quality data, and rapid learning loops. Key factors include clean customer data, rigorous statistical analysis, and cross-functional ownership to reduce friction between product, design, and analytics. Implement a testing tech stack that supports visual editor workflows, integration with Google Analytics or an alternative analytics platform, and enterprise testing tool capabilities; monitor statistical significance and pre-register hypotheses to prevent p-hacking. Optimize for conversion rate and user engagement with micro-experiments on feature exposure, pricing, and promotional codes. Example: a retail site increased conversions by 8% after prioritized A/B testing of checkout variations and coupon flows. Encourage continuous testing, document learnings in an internal playbook, and align experiments with business objectives for demonstrable return on investment.

Infographic showing four pillars: data, design variations, stats, and organizational processes

Navigating the Current State of A/B Testing Tools: What to Expect After the Google Optimize Sunset

With Google Optimize sunset in September 2023 and enterprise tools evolving, teams must evaluate alternatives for visual editing, multivariate testing, and robust statistical analysis. Choose platforms that support A/B tests, A/B/n tests, and multivariate tests, with server-side capabilities for feature experiments and controlled rollouts. Assess integrations: ensure seamless integration with Google Analytics or a compatible analytics platform, support for mobile app users, and split testing across webpages, app screens, and email campaigns. When comparing options, prioritize segment targeting, features that support an Experimentation Culture, and clarity about the statistical hypothesis testing methodology each tool uses. Vendors vary in pricing and feature sets; enterprise testing suites often include program management features, rigorous two-sample hypothesis testing, and advanced statistical analysis. Practical evaluation includes running pilot experiments to validate tracking, checking visual editor usability for product teams, and measuring how tools handle randomized allocation at scale.
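When piloting a tool, it helps to know what stable randomized allocation looks like so you can check the vendor's behaviour against it. One common approach is deterministic hashing of the user and experiment identifiers. The sketch below is an assumption about how such bucketing might be written, not any particular platform's implementation; `assign_variant` and its parameters are hypothetical names.

```python
import hashlib


def assign_variant(user_id, experiment, variants):
    """Map a user to a variant deterministically.

    Hashing the experiment name together with the user ID gives each user
    a stable assignment within one experiment, while assignments across
    different experiments are effectively independent.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


variants = ["control", "variant_b"]
print(assign_variant("user-123", "checkout-redesign", variants))

# Rough balance check over synthetic user IDs.
counts = {v: 0 for v in variants}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "checkout-redesign", variants)] += 1
print(counts)
```

Because the assignment depends only on the inputs, the same user sees the same experience on every visit without any stored state.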

Comparative table illustration of testing platforms, features, and integration capabilities

Unlocking Actionable Insights: A Proven Track Record with Our Experimentation Program

A mature experimentation program translates A/B testing data into actionable customer insights through rigorous test analysis and knowledge transfer. Start each experiment with a clear hypothesis, an expected effect size, and a pre-defined statistical analysis plan to ensure reproducibility. Use behavioral insights and customer data to segment visitors, apply personalization, and run targeted multivariate tests when interactions are hypothesized. Case study: a subscription service used sequential A/B testing to optimise pricing and trial experiences, increasing recurring conversions by double digits within six months. For organizations seeking independent financial or strategic validation, advisory resources such as a specialized financial optimisation advisory guide can help model revenue impacts and return on investment. Operationalize learnings through experiment playbooks, maintain a results repository, and train teams to interpret statistical significance correctly to avoid false positives.

Chart depicting funnel conversion improvements and documented hypothesis outcomes over time

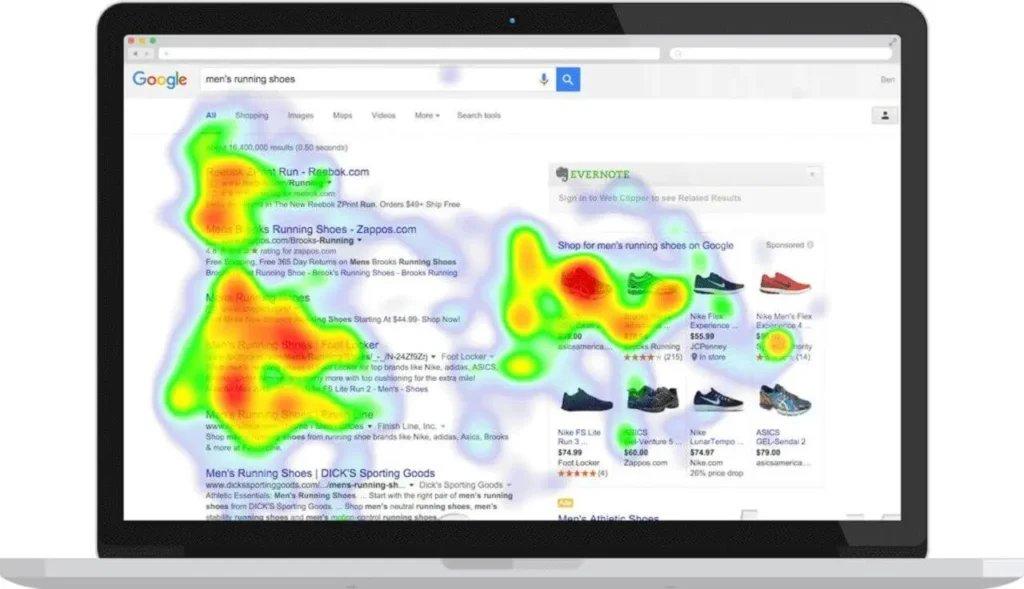

Why A/B Testing Matters: Transforming Visitor Pain Points into Continuous Optimization

A/B testing systematically reduces visitor friction by testing concrete remedies to identified pain points; this closes gaps between user experience problems and business metrics. Use user-experience research to surface issues such as slow checkout flows or unclear CTAs, then convert those into A/B tests with measurable conversion goals. Combine qualitative feedback with quantitative data to prioritize design variations, run bucket testing for major layout changes, and use multivariate tests to detect interaction effects. A cultural emphasis on continuous testing and documentation ensures that successful experiments scale across product areas and inform feature roadmaps. Practical step: create a test backlog tied to business KPIs, estimate impact, and schedule experiments to balance exploration with exploitation of winning experiences.

Illustration of customer journey improvements after iterative testing and prioritized backlog management

The Definitive A/B Testing Guide: Proven Strategies for Sustained Growth & Optimal CRO Results in 2025

To sustain growth, embed A/B testing into the product lifecycle so that every new feature is a testing opportunity rather than a release risk. Adopt an experimentation framework that mandates hypothesis statements, target metrics, and decision thresholds based on statistical analysis. Use multivariate testing for pages with multiple independent elements and A/B/n tests when you want to compare many variants efficiently. Use customer data to drive personalization experiments and employ split testing to compare webpage designs across visitor behavior cohorts. Monitor the technical implementation closely to prevent instrumentation errors and false signals; an experimentation playbook reduces regressions and supports continuous testing velocity. For operational advice on campaign orchestration and testing at scale, review trusted partner resources such as enterprise digital experience optimization case studies that showcase end-to-end program delivery and measurable conversion optimization.
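One decision threshold that is easy to overlook arises when an A/B/n test compares many variants against the control: the chance of at least one false positive grows with each comparison. A minimal sketch of the Benjamini-Hochberg procedure, which controls the false discovery rate, is shown below. The choice of this particular correction and the function name are assumptions for illustration; other corrections (Bonferroni, Holm) are also common.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return a list of booleans: True where the hypothesis is rejected.

    Sort the p-values, find the largest rank k such that p_(k) <= q * k / m,
    and reject the k smallest p-values.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= q * rank / m:
            cutoff = rank
    reject = [False] * m
    for idx in order[:cutoff]:
        reject[idx] = True
    return reject


# p-values for three variants, each compared against the control
print(benjamini_hochberg([0.20, 0.01, 0.50]))
```

In this example only the second variant clears the adjusted threshold, even though an uncorrected 0.05 cutoff would give the same answer here; the difference shows up when several p-values sit just below 0.05.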

Process diagram linking experiments to feature rollouts and scaled optimisations

Conquering First-Party Data: Understanding A/B Testing Definition and Its Impact on Conversion Rates

First-party data empowers precise targeting and reliable measurement for experimentation programs, reducing dependence on third-party cookies and enabling personalization that respects privacy. When defining A/B testing initiatives, use first-party event tracking to segment tests, measure conversion lifts, and construct customer cohorts for personalization strategies. Implement data governance and instrumentation best practices to ensure clean customer data for statistical hypothesis testing, accurate key metrics, and reproducible results. First-party data also enables sophisticated experiments like conditional personalization and targeted multivariate tests for high-value users. Operational tip: centralize event schemas, validate tracking with reconciliation tests, and maintain a data-driven approach to avoid misattribution. These practices improve conversion rate and customer experience while facilitating optimized digital marketing and campaign management.
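The operational tip above (a central event schema plus reconciliation checks) might look like the following sketch. The field names, the 2% tolerance, and both function names are hypothetical; a real schema would reflect your own tracking plan.

```python
# Hypothetical central schema: field name -> expected Python type.
REQUIRED_FIELDS = {
    "event_name": str,
    "user_id": str,
    "experiment": str,
    "variant": str,
    "timestamp": float,
}


def validate_event(event):
    """Return a list of schema problems; an empty list means the event is valid."""
    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in event:
            problems.append(f"missing field: {field}")
        elif not isinstance(event[field], expected_type):
            problems.append(f"wrong type for {field}")
    return problems


def counts_reconcile(analytics_count, backend_count, tolerance=0.02):
    """Check that analytics and backend conversion counts agree within a tolerance."""
    if backend_count == 0:
        return analytics_count == 0
    return abs(analytics_count - backend_count) / backend_count <= tolerance


event = {
    "event_name": "purchase",
    "user_id": "user-123",
    "experiment": "checkout-redesign",
    "timestamp": 1700000000.0,
}
print(validate_event(event))        # the "variant" field is missing
print(counts_reconcile(990, 1000))  # within the 2% tolerance
```

Running the validation in a pre-launch check catches events that would otherwise be silently dropped from the experiment analysis.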

Diagram of first-party data flow from events through analytics to experimentation outcomes

Crafting Testable Hypotheses: Linking User Behavior to Measurable Business Impact

Hypothesis-driven test creation anchors experiments in measurable business impact by linking user behavior observations to testable changes. Craft hypotheses that specify the target segment, the proposed design variations, the expected direction of effect, and quantifiable key metrics such as conversion rate or average order value. Use two-sample hypothesis testing methods for primary comparisons, and expand to multivariate tests when exploring interactions between independent elements. Prioritize tests by expected return on investment and by how uncertain the estimate is (its confidence interval); this keeps effort from drifting toward low-value tests. Train teams on statistical analysis and result interpretation to maintain experiment reliability and avoid drawing premature conclusions. For deeper operational guidance and to fast-track implementation, consult specialist partners on tech stack integration and experimentation ops.
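For a conversion-rate metric, the two-sample comparison mentioned above is often a two-proportion z-test. The sketch below uses only the standard library; the function name and the example counts are made up for illustration, and the normal approximation it relies on assumes reasonably large samples.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    conv_a, conv_b are conversion counts; n_a, n_b are visitors per arm.
    Returns the z statistic and the two-sided p-value.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis of no difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # For a standard normal Z, P(|Z| > |z|) equals erfc(|z| / sqrt(2)).
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


z, p = two_proportion_z_test(conv_a=200, n_a=4000, conv_b=250, n_b=4000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference is unlikely to be noise, but it says nothing about whether the lift is large enough to matter commercially, which is why the hypothesis should state the expected effect in advance.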

Template graphic showing hypothesis components and prioritization matrix for test selection

Understanding Test Statistics: P-Values, Confidence Intervals, and Statistical Power

Understanding common test statistics is essential for interpreting A/B testing outcomes: know how p-values, confidence intervals, and statistical power relate to business decisions. Calculate sample sizes ahead of time based on expected effect sizes and desired power to avoid underpowered experiments. Use sequential testing cautiously and document stopping rules to preserve statistical validity. For multivariate tests and A/B/n comparisons, adjust for multiple comparisons to control false discovery rates and use robust statistical analysis to interpret complex interactions. Apply statistical hypothesis testing principles to assess whether observed differences likely reflect real customer insights or noise. Equip analysts with automated analysis pipelines and dashboards to expedite decision-making and standardize reporting.
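To make the sample-size step concrete, here is a sketch of the standard normal-approximation formula for comparing two proportions. The function name, the default significance level of 0.05, and the default power of 0.8 are conventional choices assumed for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_arm(baseline, mde_abs, alpha=0.05, power=0.8):
    """Visitors needed in each arm to detect an absolute lift of mde_abs.

    baseline is the control conversion rate; the test is two-sided.
    """
    p1 = baseline
    p2 = baseline + mde_abs
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / mde_abs ** 2)


# 5% baseline conversion rate, detecting a lift to 6%
print(sample_size_per_arm(baseline=0.05, mde_abs=0.01))
```

For a 5% baseline and a one-point absolute lift this comes out at roughly eight thousand visitors per arm, and halving the detectable lift roughly quadruples the requirement, which is why small expected effects need long-running tests.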

Statistical visualization showing p-values, confidence intervals, and sample size relationships

Mastering Advanced Experimentation Types: A/B/n Testing and Multivariate Testing for Superior CRO Results

Advanced experimentation types like A/B/n testing and multivariate testing unlock deeper behavioral insights when used appropriately. A/B/n tests are ideal when comparing several distinct variant versions simultaneously, while multivariate tests measure the interaction effects of multiple element changes across a webpage. Choose multivariate tests only when traffic supports the combinatorial sample size needs, and prefer A/B/n when quick comparisons among a few strategic variations suffice. Where appropriate, use randomized controlled experiment designs to maintain internal validity and control for visitor behavior heterogeneity. Complement these with personalization experiments driven by customer data to improve relevance and conversion outcomes. To balance speed and rigor, sequence simple A/B tests to validate major hypotheses before investing in complex multivariate designs.
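The combinatorial traffic requirement is simple arithmetic, sketched below for a full-factorial design. The function name and the example figures are assumptions; the per-cell sample size would come from your own power calculation.

```python
from math import ceil, prod


def mvt_traffic_estimate(levels_per_element, n_per_cell, daily_visitors):
    """Estimate cells, total visitors, and days for a full-factorial test.

    levels_per_element lists how many versions each page element has,
    e.g. [2, 3, 2] for two headlines, three images, and two buttons.
    """
    cells = prod(levels_per_element)
    total_visitors = cells * n_per_cell
    days = ceil(total_visitors / daily_visitors)
    return cells, total_visitors, days


cells, total, days = mvt_traffic_estimate([2, 3, 2], n_per_cell=8000, daily_visitors=10_000)
print(cells, total, days)  # 12 cells, 96000 visitors, 10 days
```

Adding a single extra version of one element multiplies the cell count, which is why a few sequential A/B tests are often cheaper than one large multivariate design.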

Diagram contrasting A/B/n test flows with multivariate test matrices and traffic requirements

Implementation Guide: Step-by-Step A/B Testing for Integration

A step-by-step implementation guide simplifies integration by outlining setup, instrumentation, and analysis checkpoints. Start with traffic and metric audits, implement event tracking to capture conversion behaviors, and set up a testing environment with feature flags to control rollouts. Run smoke tests to validate variant allocation and analytics events, then launch experiments with predefined sample sizes and segmentation. Monitor real-time metrics for system issues, enforce statistical analysis plans, and automate routine reporting to speed insights. For teams needing managed support and to accelerate implementation velocity, consider partnerships with specialists who provide on-the-ground assistance for tech stack integration and test orchestration.
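One way to validate variant allocation during a smoke test is a sample ratio mismatch (SRM) check: compare the observed split with the intended split using a chi-square goodness-of-fit test. The sketch below covers the two-arm case with the standard library only; the function name and the 0.001 alert threshold are assumptions for illustration.

```python
from math import erfc, sqrt


def srm_p_value(n_control, n_variant, expected_control_share=0.5):
    """P-value of a chi-square goodness-of-fit test for a two-arm split.

    A very small p-value suggests the observed allocation differs from
    the intended one, which usually points to an instrumentation bug.
    """
    total = n_control + n_variant
    expected_control = total * expected_control_share
    expected_variant = total - expected_control
    chi2 = (
        (n_control - expected_control) ** 2 / expected_control
        + (n_variant - expected_variant) ** 2 / expected_variant
    )
    # With one degree of freedom, P(chi2 > x) equals erfc(sqrt(x / 2)).
    return erfc(sqrt(chi2 / 2))


p = srm_p_value(n_control=5000, n_variant=5400)
if p < 0.001:  # hypothetical alert threshold
    print(f"Possible sample ratio mismatch (p = {p:.6f}); check allocation and tracking")
```

If the check fires, it is usually safer to pause the analysis and investigate redirects, bot filtering, or event loss than to interpret the conversion results.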

Stepwise checklist visual from planning to roll-out and analysis showing integration milestones

Boosting Testing Velocity: Roles, Tooling, and Experimentation Ops

Boosting testing velocity requires organizational alignment, tooling that reduces setup friction, and dedicated experimentation ops to handle increased test throughput. Establish an Experimentation Culture with clear roles (experiment owner, analyst, product and design leads) and implement templates for hypothesis creation, QA, and statistical analysis. Use visual editor tools and automated sample size calculators to reduce time-to-launch, and employ feature flags for rapid rollouts and safe rollbacks. Engage CRO experts to guide prioritization, mentor teams on statistical best practices, and troubleshoot instrumentation issues. A focused approach increases the cadence of high-quality A/B tests, reduces technical debt, and surfaces winning experiences faster. Track program health via metrics like tests per month, win rate, and cumulative conversion lift.
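The program health metrics named above can be computed from a simple test log. In this sketch the record layout (`won`, `relative_lift`) and the function name are hypothetical, and cumulative lift is assumed to compound multiplicatively across shipped winners, which is a simplification.

```python
from math import prod


def program_health(tests, months):
    """Summarize an experimentation program from a list of test records.

    Each record is a dict with 'won' (bool) and 'relative_lift'
    (e.g. 0.05 for a 5% relative lift in conversion rate).
    """
    winners = [t for t in tests if t["won"]]
    return {
        "tests_per_month": len(tests) / months,
        "win_rate": len(winners) / len(tests) if tests else 0.0,
        # Compound the lifts of shipped winners: (1 + l1) * (1 + l2) * ... - 1
        "cumulative_lift": prod(1 + t["relative_lift"] for t in winners) - 1,
    }


log = [
    {"won": True, "relative_lift": 0.10},
    {"won": False, "relative_lift": 0.00},
    {"won": True, "relative_lift": 0.05},
    {"won": False, "relative_lift": -0.02},
]
print(program_health(log, months=2))
```

Measured lifts from winning tests tend to overstate the long-run effect, so a compounded figure like this is best treated as an upper bound rather than a forecast.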

Team workflow illustration showing cross-functional roles and velocity metrics in experimentation program

Case Studies and Future Trends: Where A/B Testing Is Heading

Case studies show that disciplined A/B testing delivers sustained growth: for example, retailers that aligned experimentation with personalization saw conversion rate uplifts of 7–12% within a year. Future trends include stronger first-party data use, server-side experimentation, and AI-powered variant generation to accelerate test ideation. Companies should adopt multivariate testing selectively, invest in statistical analysis automation, and build an Experimentation Culture to scale. Track evolving topics like alternatives to Google Optimize, enterprise testing tool innovation, and integration with broader digital marketing stacks. For local operational examples and creative testing inspiration, explore community and vendor showcases, such as regional success stories from partners that combine technical delivery with user-focused design.

Collage of case study highlights showing conversion lift charts and trend projections

Rigorous Test Analysis: Leveraging A/B Test Results for Actionable Insights

Rigorous test analysis converts raw A/B testing outcomes into strategic recommendations by focusing on effect sizes, confidence intervals, and business impact modeling. Analysts should quantify conversion optimization gains in revenue terms and simulate return on investment across scenarios. Apply segmentation to uncover differential impacts by user cohorts and use behavioral insights to craft follow-up experiments. Document negative results as informative outcomes to avoid repeating futile variations and to refine hypotheses. Disseminate learnings through runbooks and central repositories so product teams can leverage winning experiences across channels. A mature program invests in statistical quality control, reproducible analysis scripts, and cross-team workshops to translate results into product roadmaps and optimized digital experiences.
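Translating an effect size and its confidence interval into revenue terms can be sketched as follows. The function names, the traffic figure, and the average order value are placeholders, and the projection assumes the measured lift persists unchanged for a full year, which is a strong assumption.

```python
from math import sqrt
from statistics import NormalDist


def lift_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Confidence interval for the absolute difference in conversion rate (B minus A)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Unpooled standard error of the difference between two proportions.
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


def annual_revenue_range(interval, annual_visitors, avg_order_value):
    """Project the interval endpoints into incremental annual revenue."""
    low, high = interval
    scale = annual_visitors * avg_order_value
    return low * scale, high * scale


ci = lift_confidence_interval(conv_a=200, n_a=4000, conv_b=250, n_b=4000)
rev_low, rev_high = annual_revenue_range(ci, annual_visitors=1_000_000, avg_order_value=50.0)
print(f"Lift: {ci[0]:.4f} to {ci[1]:.4f}")
print(f"Incremental revenue: {rev_low:,.0f} to {rev_high:,.0f}")
```

Reporting the range rather than a single point estimate makes it clearer to stakeholders how much of the projected gain is still uncertain.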

Dashboard mockup showing segmented results, confidence intervals, and revenue impact projections

Lessons Learned from Opticon 2025: Elevating Your Experimentation Program

Opticon 2025 emphasized practical lessons: prioritize clean instrumentation, adopt sequential analysis safeguards, and invest in experimentation ops to sustain high test volumes. Key takeaways include documenting stopping rules, pre-defining primary metrics, and balancing exploratory tests with high-probability impact experiments. Speakers highlighted case studies where coordinated A/B testing across email campaigns and product screens reduced churn and improved trial-to-paid conversions. Implementing these lessons requires a strategic plan, training for A/B testing experts, and a tech stack that supports fast iterations and robust analysis. Operationalizing these insights can materially improve visitor behavior understanding and conversion goals.

Conference highlights visual showing session topics and practical takeaways for experimentation teams

The Future of A/B Testing Tools: Automation, AI, and First-Party Data

Forecasts for A/B testing tools point to increased automation, AI-assisted variant generation, and tighter integrations with customer data platforms. Expect more sophisticated experiments leveraging first-party data for personalization and dynamic allocation of traffic to maximize conversion rate. Tooling will favor experiment reproducibility, native statistical analysis, and cross-channel testing that spans webpages, app screens, and email campaigns. Organizations should plan for evolving privacy constraints by reinforcing first-party strategies and robust data governance. Continued investment in Experimentation Culture and testing infrastructure will be decisive in maintaining conversion optimization momentum and achieving consistent growth.
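Dynamic traffic allocation is often implemented with a multi-armed bandit. The sketch below shows Thompson sampling with Beta posteriors on simulated traffic; it is one possible technique rather than a description of any specific vendor's algorithm, and the function names and conversion rates are invented for the simulation.

```python
import random


def thompson_choose(stats, rng):
    """Pick the variant whose sampled conversion rate is highest.

    stats maps variant name -> [conversions, exposures]. Each variant's
    rate is drawn from a Beta posterior with a uniform Beta(1, 1) prior.
    """
    best_name, best_draw = None, -1.0
    for name, (conversions, exposures) in stats.items():
        draw = rng.betavariate(1 + conversions, 1 + exposures - conversions)
        if draw > best_draw:
            best_name, best_draw = name, draw
    return best_name


def simulate(true_rates, visitors=5000, seed=1):
    """Run a simulated experiment and return per-variant [conversions, exposures]."""
    rng = random.Random(seed)
    stats = {name: [0, 0] for name in true_rates}
    for _ in range(visitors):
        arm = thompson_choose(stats, rng)
        stats[arm][1] += 1
        if rng.random() < true_rates[arm]:
            stats[arm][0] += 1
    return stats


print(simulate({"control": 0.05, "variant": 0.15}))
```

The trade-off is that adaptive allocation sends fewer visitors to weaker variants, which improves conversions during the test but makes classical fixed-sample significance tests harder to apply to the results.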

Futuristic diagram of integrated experimentation platform with AI and data privacy layers

Emerging Developments in Multivariate Testing: Adaptive and Bayesian Approaches

Emerging developments in multivariate testing include smarter traffic allocation algorithms, adaptive experiments that learn variant performance, and hybrid approaches blending A/B/n and multivariate techniques. For most companies, the critical consideration is traffic sufficiency and clear hypothesis definitions so that multivariate tests yield interpretable interaction effects. Use Bayesian approaches for flexible decision-making and combine personalization layers to surface winning combinations for different cohorts. Incorporate user-experience research to reduce test dimensionality and focus on combinations with the highest expected return. Operational readiness includes monitoring statistical analysis, ensuring instrumentation integrity, and maintaining a continuous testing backlog.
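A Bayesian reading of a test often reports the probability that one variant beats another. A minimal Monte Carlo sketch with a Beta-Binomial model follows; the uniform prior, the number of draws, and the function name are assumptions for illustration.

```python
import random


def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20_000, seed=0):
    """Estimate P(rate_B > rate_A) by sampling from Beta posteriors.

    Uses a uniform Beta(1, 1) prior for both arms.
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


print(prob_b_beats_a(conv_a=200, n_a=4000, conv_b=250, n_b=4000))
```

A decision rule such as "ship when the probability exceeds 95%" still needs to be fixed before the test starts; the Bayesian framing changes how results are expressed, not the need for a pre-defined plan.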

Schematic of multivariate test matrix with adaptive allocation and cohort personalization overlays

Empowering Your Company with A/B Testing: Driving Continuous Optimization Towards 2025 Success

Empowerment requires embedding experimentation into decision workflows so product managers, designers, and analysts share responsibility for test outcomes. Create training programs, codify playbooks, and appoint experimentation champions who maintain the test backlog and governance. Align KPIs with business objectives, encourage continuous testing, and prioritize high-impact hypotheses that focus on optimising digital assets, conversion goals, and user engagement. Use consistent reporting to show cumulative conversion rate improvements and operational metrics like tests per quarter. For creative inspiration and local engagement examples, consider community and vendor showcases that highlight iterative successes; examples from smaller regional partners on user engagement and optimization can provide unique contextual insights for targeted experiments.

Team training session image showing collaborative test planning and KPI alignment activities

Practical Checklist: Summarizing the Lessons

Summarize lessons into practical checklists: start with clear hypotheses, ensure data quality, select the right experiment type, and pre-define analysis plans to avoid ad-hoc interpretations. Balance speed with statistical rigor by using simple A/B testing for quick validation and multivariate tests for interaction discovery. Centralize results and maintain an insights repository so successful variants inform broader product and marketing decisions. Encourage collaboration across analytics, product, and design to streamline experiment execution and maximize learning velocity. Include examples of success metrics and case studies demonstrating conversion optimization improvements, and set up governance to sustain Experimentation Culture.

One-page summary infographic of key A/B testing rules and prioritized checklist for teams

Critical Takeaways: Building a Repeatable Growth Engine

Critical takeaways: prioritize clean instrumentation and first-party data, codify hypothesis and analysis plans, and choose experiment types aligned with traffic and complexity constraints. Maintain an experimentation playbook, invest in training, and use statistical hygiene to interpret results confidently. Emphasize customer data and behavioral insights to design targeted personalization experiments and A/B/n tests that uncover scalable wins. Regularly review program health metrics—win rate, cumulative conversion lift, and testing velocity—and adjust priorities accordingly. A disciplined approach turns A/B testing into a repeatable growth engine that supports sustained conversion rate optimisation.

Graphic highlighting three pillars: data integrity, hypothesis rigor, and iterative scaling of experiments
