In Sydney’s competitive ecommerce landscape, data-driven decisions are essential for staying ahead and maximizing conversions. A/B testing has become a powerful tool for online retailers looking to optimize their websites, but getting real value from it depends on understanding the statistics behind each test: significance, power, and the Minimum Detectable Effect (MDE). Without a solid grasp of these concepts, store owners risk misreading results, missing genuine improvements, or rolling out changes that don’t actually help. This guide explains the core statistical principles of A/B testing for Sydney ecommerce stores so you can run more effective experiments and drive meaningful growth.

1. Understanding A/B Testing and Its Importance for Sydney Ecommerce

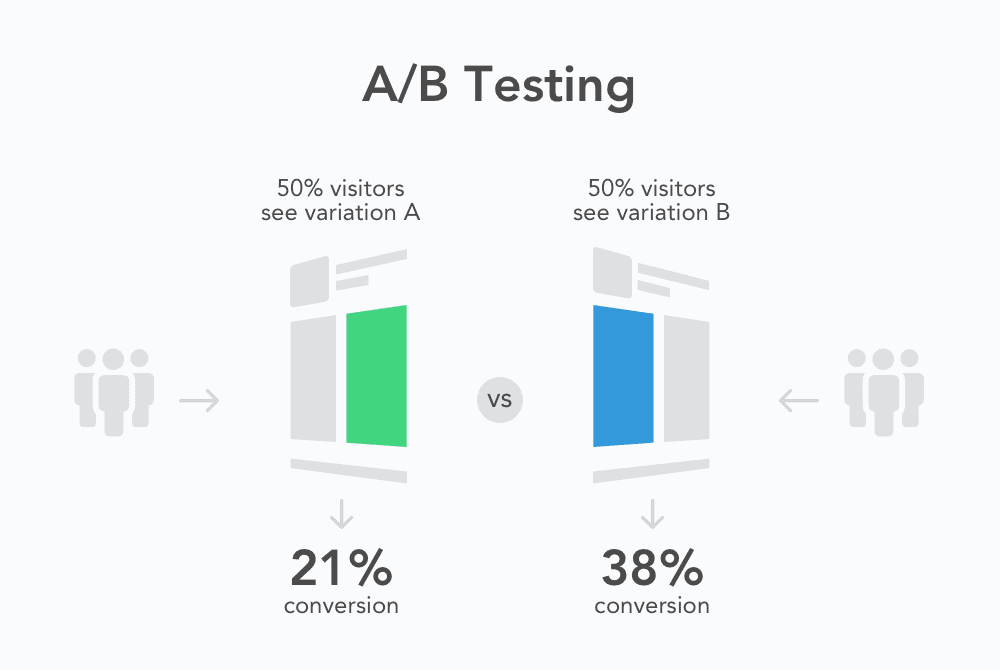

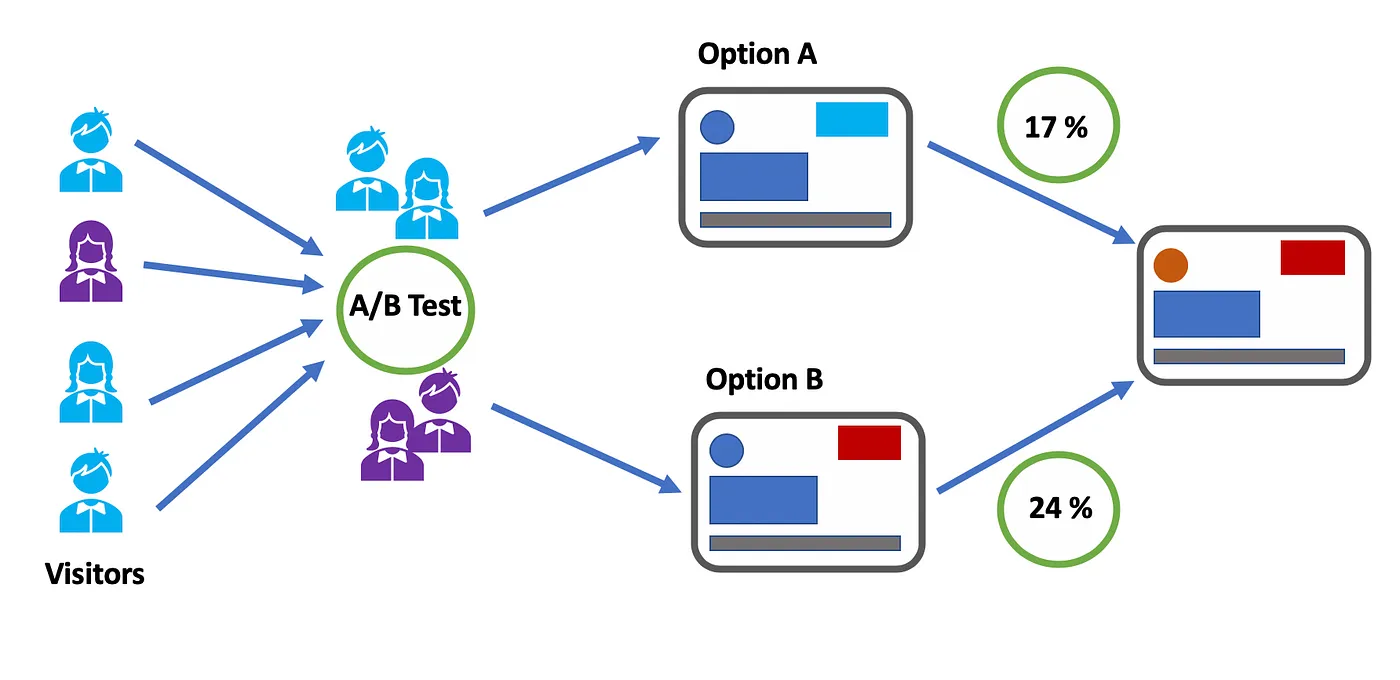

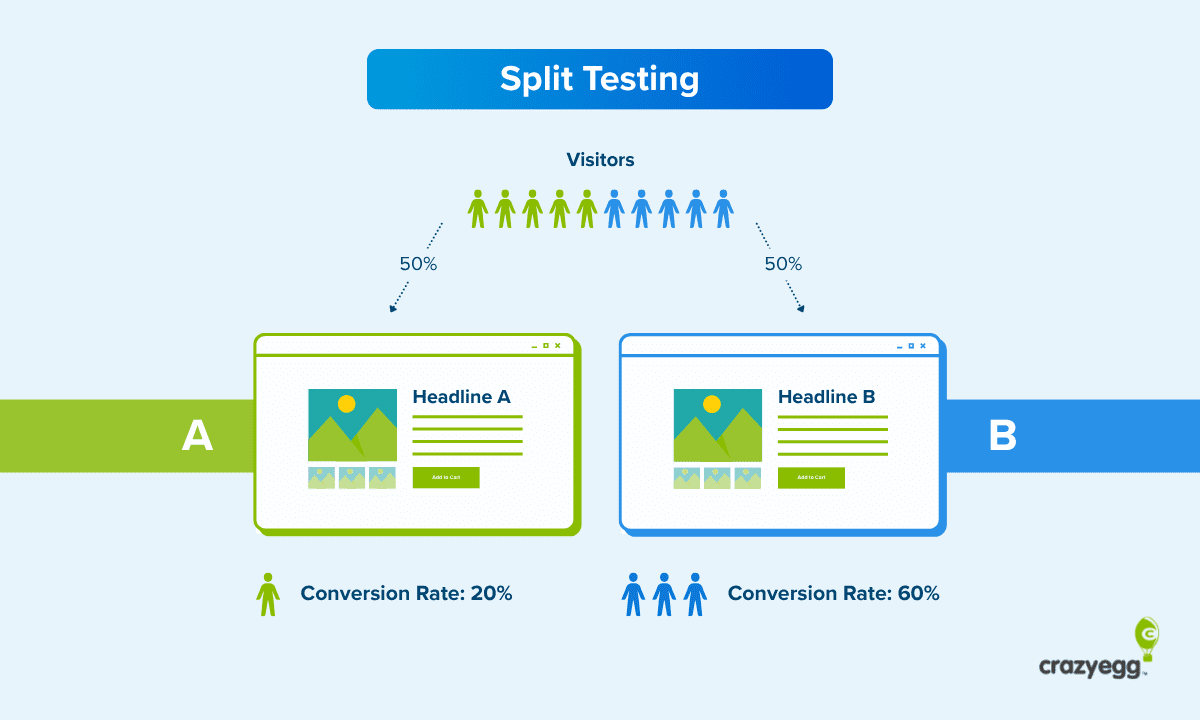

A/B testing lets Sydney ecommerce stores make data-driven decisions by comparing two versions of a webpage, app feature, or marketing element to see which performs better. Website visitors are randomly split into two groups: Group A sees the original version (the control) and Group B sees the variant. You then measure differences in key metrics such as click-through rate, conversion rate, or average order value. Because random assignment makes the two groups comparable, a large enough difference can be attributed to the change itself rather than to who happened to visit, which replaces guesswork with evidence about what truly resonates with your customers. For Sydney-based stores in a competitive market, mastering A/B testing is crucial to optimizing user experience, increasing sales, and staying ahead. By systematically testing and refining elements like product pages, promotional banners, and checkout flows, you can uncover insights that drive meaningful improvements across your store.
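How visitors get “randomly split” depends on your testing tool. One common approach, shown here as a minimal sketch rather than how any particular platform does it, is to hash a visitor ID together with an experiment name: the result behaves randomly across visitors but is repeatable, so a returning shopper keeps seeing the same version. The function name, the `visitor_id` and `experiment` strings, and the 50/50 default are illustrative assumptions.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, share_a: float = 0.5) -> str:
    """Assign a visitor to "A" (control) or "B" (variant), repeatably.

    Hypothetical helper: hashing experiment + visitor ID yields a bucket in
    [0, 1) that is effectively random across visitors but identical every
    time the same visitor returns to the same experiment.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Use the first 32 bits of the hash as a number in [0, 1).
    bucket = int(digest[:8], 16) / 2**32
    return "A" if bucket < share_a else "B"


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"visitor-{i}", "new-product-page")] += 1
    print(counts)  # close to a 50/50 split
```

Including the experiment name in the hash means the same visitor can land in different groups for different experiments, which keeps concurrent tests independent of one another.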

2. The Role of Statistical Significance in A/B Testing

Statistical significance plays a crucial role in A/B testing, especially for Sydney ecommerce stores looking to make data-driven decisions that enhance user experience and boost conversions. It addresses one question: could the difference observed between two variants, such as a new website design versus the current layout, plausibly be explained by random variation alone? A significance test answers this with a p-value, the probability of seeing a difference at least as large as the one observed if the two versions actually performed the same. You choose a significance level (α) before the test, commonly 0.05 or 5%, and call a result significant when the p-value falls below it. This caps the false-positive rate: when there is no real difference, you will wrongly conclude that there is one in only about 5% of tests. Significance does not, however, tell you that an improvement is large enough to matter for your business; that is a separate question about effect size. Without a significance threshold, businesses risk acting on noise and investing in changes that don’t actually improve performance. Understanding and correctly applying statistical significance lets Sydney ecommerce stores optimize their websites and marketing with confidence, driving sustainable growth in a competitive market.
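For conversion rates, a standard way to get that p-value is the two-proportion z-test. The sketch below uses only Python’s standard library and the normal approximation, which works reasonably well when each group has at least a few dozen conversions; the visitor and order counts in the example are made up for illustration.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided pooled z-test for a difference in conversion rates.

    Returns (z, p_value) using the normal approximation.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Under the null hypothesis both versions share a single conversion rate.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no conversions (or all conversions): no evidence either way
    z = (rate_b - rate_a) / se
    # Two-sided p-value: P(|Z| >= |z|) for a standard normal Z.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


ALPHA = 0.05  # significance level, chosen before the test starts

# Hypothetical test: 200 orders from 10,000 control visitors (2.0%)
# versus 250 orders from 10,000 variant visitors (2.5%).
z, p = two_proportion_z_test(200, 10_000, 250, 10_000)
print(f"z = {z:.2f}, p = {p:.3f}, significant: {p < ALPHA}")
```

With these made-up numbers the p-value is about 0.017, below the 0.05 threshold, so the result would be declared significant.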

3. Power Analysis: Ensuring Reliable Test Results

Power analysis is a crucial step in designing effective A/B tests, especially for Sydney ecommerce stores aiming to make data-driven decisions with confidence. At its core, power analysis helps determine the minimum sample size needed to detect a meaningful difference between your test variants, ensuring your results are both reliable and actionable. Without adequate power, your test risks yielding inconclusive or misleading outcomes, potentially leading to wasted resources or missed opportunities.

In ecommerce terms, power is the probability that your test will detect a true effect, such as an increase in conversion rate or average order value, when that effect exists. A power of 80% or higher is typically recommended: at 80% power, if the true effect is at least as large as the one you planned for, there is an 80% chance the test will come out significant (and a 20% chance of a false negative). Power analysis ties together four quantities: the effect size you want to detect (the Minimum Detectable Effect, or MDE), the significance level, the desired power, and your store’s baseline metric, such as its current conversion rate. Fix three of them and the sample size follows; in practice you usually choose the MDE, significance level, and power, then solve for the number of visitors you need.
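As a rough illustration of how those inputs combine, the sketch below applies one common textbook formula for comparing two conversion rates (normal approximation, two-sided test, equal traffic to each variant). Online calculators may use slightly different formulas and give somewhat different numbers; the 2% baseline and 10% relative MDE in the example are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline, relative_mde, alpha=0.05, power=0.80):
    """Visitors needed in EACH variant to detect a relative lift of relative_mde.

    n = (z_{1-alpha/2} + z_{power})^2 * [p1(1-p1) + p2(1-p2)] / (p2 - p1)^2
    """
    p1 = baseline
    p2 = baseline * (1 + relative_mde)  # conversion rate if the lift is real
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


# Hypothetical store: 2% baseline conversion rate, aiming to detect a 10% relative lift.
print(sample_size_per_variant(0.02, 0.10))  # roughly 80,000 visitors per variant
```

Halving the MDE roughly quadruples the required sample, which is why chasing very small lifts is expensive for stores with modest traffic.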

For Sydney ecommerce businesses, a thorough power analysis ensures that your A/B tests are neither underpowered nor unnecessarily large, balancing speed against accuracy. By planning your sample size up front, you can avoid common pitfalls like false negatives and confidently implement changes that drive growth and enhance customer experience. Ultimately, mastering power analysis helps your team make smarter, data-backed decisions that keep your ecommerce store competitive.

4. Defining and Calculating Minimum Detectable Effect (MDE)

Minimum Detectable Effect (MDE) is a crucial concept in A/B testing, especially for Sydney ecommerce stores aiming to make data-driven decisions with confidence. Simply put, MDE is the smallest true difference between your control and variant that your test is designed to detect reliably, given your chosen significance level and power. Smaller real differences may still exist, but the test is likely to miss them. Defining MDE up front lets you set realistic expectations for the experiment and check that the test is sensitive enough to pick up changes in key metrics like conversion rate or average order value that would matter to your business.

To calculate MDE, you need four inputs: the baseline conversion rate (or other current performance metric), the significance level (commonly 0.05, i.e. 95% confidence), the statistical power (commonly 80%), and the sample size available for testing. The calculation balances these parameters to find the smallest effect your experiment can reliably detect. A smaller sample size increases the MDE, meaning only larger differences can be confidently identified. The baseline matters too: at the low conversion rates typical in ecommerce, a lower baseline means a larger relative MDE, so a store converting at 1% needs far more traffic than one converting at 4% to detect the same percentage lift. Conversely, increasing your sample size lowers the MDE. Accepting lower power or a higher significance level also lowers it, but at the cost of more false negatives or more false positives, respectively.
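For a conversion rate, a common normal-approximation version of that calculation, assuming equal traffic to each variant and using the baseline variance for both groups, is shown below. Here $p$ is the baseline conversion rate, $n$ the number of visitors per variant, $\alpha$ the significance level, $1-\beta$ the power, and $z_q$ the $q$-quantile of the standard normal distribution (for $\alpha = 0.05$ and 80% power, $z_{1-\alpha/2} + z_{1-\beta} \approx 1.96 + 0.84 = 2.80$).

$$
\mathrm{MDE}_{\text{abs}} \approx \left(z_{1-\alpha/2} + z_{1-\beta}\right)\sqrt{\frac{2\,p\,(1-p)}{n}},
\qquad
\mathrm{MDE}_{\text{rel}} = \frac{\mathrm{MDE}_{\text{abs}}}{p} \approx \left(z_{1-\alpha/2} + z_{1-\beta}\right)\sqrt{\frac{2\,(1-p)}{n\,p}}
$$

Both expressions shrink in proportion to $1/\sqrt{n}$ as traffic grows. The absolute MDE rises slightly as $p$ moves toward 50%, but the relative MDE falls as $p$ rises, which is why low-converting stores need more traffic to detect the same percentage lift.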

For Sydney ecommerce businesses, defining MDE carefully ensures that A/B tests are neither underpowered (risking false negatives) nor excessively large (wasting time and traffic). Online MDE calculators or statistical software can simplify this process, enabling marketers to design robust experiments that provide actionable insights and drive better optimization and growth.
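If you would rather script the calculation than rely on an online calculator, a minimal Python sketch using only the standard library might look like this. It assumes a two-sided test, equal traffic per variant, and the baseline variance for both groups; the traffic figures in the example are hypothetical.

```python
import math
from statistics import NormalDist


def relative_mde(baseline, n_per_variant, alpha=0.05, power=0.80):
    """Approximate smallest relative lift detectable with n_per_variant visitors each."""
    z_total = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    # Absolute MDE in conversion-rate units (e.g. 0.004 = 0.4 percentage points).
    abs_mde = z_total * math.sqrt(2 * baseline * (1 - baseline) / n_per_variant)
    return abs_mde / baseline


# Hypothetical: 20,000 visitors per variant at two different baseline conversion rates.
for rate in (0.02, 0.04):
    print(f"baseline {rate:.0%}: relative MDE ≈ {relative_mde(rate, 20_000):.1%}")
```

With these made-up numbers, a store converting at 2% can only reliably detect lifts of about 19.6%, versus about 13.7% for a store converting at 4% with the same traffic.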

5. Practical Tips for Designing Effective A/B Tests

Designing effective A/B tests is crucial for Sydney ecommerce stores aiming to make data-driven decisions that truly impact their business outcomes. Start by writing down a clear hypothesis and choosing the one key metric you want to improve, whether that’s conversion rate, average order value, or click-through rate. This focus keeps your test targeted and actionable.

Next, determine an appropriate sample size from your significance level, statistical power, and minimum detectable effect (MDE). Underpowered tests can lead to inconclusive results, while overly large samples waste traffic and time. Run your variants simultaneously, with visitors randomly assigned to each, so that external factors like seasonality or promotions affect both groups equally instead of skewing the comparison.

Segment your audience thoughtfully: testing on relevant customer groups can reveal insights specific to your Sydney market demographics, but each segment needs enough traffic on its own, so decide on segments before the test rather than slicing the results afterwards. Finally, run the test long enough to capture typical user behavior patterns, usually at least one full business cycle (for most stores, one or more complete weeks, so weekday and weekend shoppers are both represented), but avoid unnecessarily extended tests that delay decision-making. By following these practical guidelines, you can design A/B tests that provide reliable, actionable insights to optimize your ecommerce store’s performance.

6. Interpreting Results and Making Data-Driven Decisions

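Interpreting the results of your A/B tests accurately is crucial for making informed, data-driven decisions that can significantly impact your Sydney ecommerce store’s performance. When a test has reached its planned sample size and the result is statistically significant, it means a difference as large as the one observed would be unlikely (less likely than your chosen significance level) if the control and variant actually performed the same. It does not prove the variant is better, and it does not tell you how large the improvement is. Power matters most when a result is not significant: in a well-powered test, a non-significant result suggests any true effect is probably smaller than your MDE, whereas in an underpowered test it tells you very little, so you can’t conclude that the change had no effect.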

When reviewing your results, pay close attention to the metrics that match your test goals, such as conversion rate, average order value, or click-through rate. Compare the observed difference, and the confidence interval around it, with your Minimum Detectable Effect (MDE) to judge whether the change is not only statistically significant but also large enough to be practically meaningful for your business.
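One way to make that comparison concrete is to compute a confidence interval for the difference in conversion rates and check where it sits relative to zero and to your MDE. The sketch below uses a simple normal-approximation (Wald) interval; the hypothetical `interpret` helper and its labels, the order counts, and the 0.2-percentage-point MDE are illustrative assumptions, not a standard rule.

```python
import math
from statistics import NormalDist


def diff_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Wald confidence interval for (rate_B - rate_A)."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    se = math.sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = rate_b - rate_a
    return diff - z * se, diff + z * se


def interpret(low, high, mde_abs):
    """Hypothetical rule of thumb for reading the interval against the MDE."""
    if low > 0:
        # The whole interval is above zero: B is better at this confidence level.
        if low >= mde_abs:
            return "B wins, and even the low end clears the MDE"
        return "B wins, but the true lift may be smaller than the MDE"
    if high < 0:
        return "B is worse"
    return "inconclusive"


# Hypothetical result: 200/10,000 orders for A vs 250/10,000 for B; MDE = 0.2 points.
low, high = diff_confidence_interval(200, 10_000, 250, 10_000)
print(f"95% CI for the lift: {low:.2%} to {high:.2%}")
print(interpret(low, high, mde_abs=0.002))
```

Here the interval runs from roughly 0.09 to 0.91 percentage points: the variant very likely helps, but the data can’t rule out a lift smaller than the 0.2-point MDE.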

Remember, data-driven decisions go beyond just picking the “winning” variant. Use your test insights to identify patterns, customer preferences, and potential areas for further optimization. By combining statistical rigor with strategic analysis, Sydney ecommerce stores can confidently implement changes that boost user experience, increase revenue, and stay ahead in a competitive market.

7. Avoiding Common Statistical Pitfalls in A/B Testing

When conducting A/B testing for your Sydney ecommerce store, it’s crucial to be aware of common statistical pitfalls that can lead to misleading conclusions and costly mistakes. One frequent error is running underpowered tests, where the sample size is too small to reliably detect a meaningful difference. These often end in inconclusive results or false negatives, causing you to miss real improvements. Another common mistake is peeking at the data repeatedly and stopping the test as soon as a desired outcome appears. Every extra look is another chance for random noise to cross the significance threshold, so this practice inflates the false-positive rate well above the level you set, making you believe an effect exists when it doesn’t. Additionally, neglecting to define your Minimum Detectable Effect (MDE) beforehand can lead to unrealistic expectations or to reading minor fluctuations as meaningful changes. To avoid these pitfalls, calculate the required sample size from your significance level, statistical power, and MDE before launching your test. Then commit to that sample size and evaluate significance only once it is reached; if you need to monitor results along the way, use a sequential testing method designed for repeated looks. Finally, ensure proper randomization to avoid bias. By steering clear of these common errors, you’ll make more confident, data-driven decisions that genuinely improve your ecommerce performance.
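The cost of peeking is easy to demonstrate with a simulation. The sketch below runs many hypothetical A/A tests, where both groups share exactly the same conversion rate so every “winner” is a false positive, and compares checking significance at ten interim looks against checking once at the planned end. The traffic per look, the 10% conversion rate, and the number of looks are arbitrary assumptions, and the pooled z-test is a standard normal approximation.

```python
import math
import random


def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided pooled two-proportion z-test p-value."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_aa_tests(runs=300, looks=10, batch=400, rate=0.10, alpha=0.05, seed=1):
    """Return (false-positive rate with peeking, false-positive rate at fixed end)."""
    rng = random.Random(seed)
    peeking_hits = fixed_hits = 0
    for _ in range(runs):
        conv_a = conv_b = n = 0
        flagged_at_any_look = False
        for _ in range(looks):
            # Both groups convert at the same true rate: there is no real effect.
            conv_a += sum(rng.random() < rate for _ in range(batch))
            conv_b += sum(rng.random() < rate for _ in range(batch))
            n += batch
            if p_value(conv_a, n, conv_b, n) < alpha:
                flagged_at_any_look = True  # a "peeker" would stop here and ship B
        peeking_hits += flagged_at_any_look
        # Fixed-horizon analysis: one test at the planned sample size only.
        fixed_hits += p_value(conv_a, n, conv_b, n) < alpha
    return peeking_hits / runs, fixed_hits / runs


peek_rate, fixed_rate = simulate_aa_tests()
print(f"False positives with peeking: {peek_rate:.1%}")
print(f"False positives at fixed end: {fixed_rate:.1%}")
```

In runs like this, the fixed-horizon rate stays close to the 5% you signed up for, while the peeking rate typically comes out several times higher.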

8. Balancing Business Impact and Statistical Rigor

When conducting A/B testing for your Sydney ecommerce store, striking the right balance between business impact and statistical rigor is crucial to making informed decisions that truly benefit your bottom line. On one hand, focusing solely on statistical significance without considering the practical relevance of the results can lead to decisions that, while mathematically sound, may yield negligible improvements in user experience or revenue. On the other hand, prioritizing business impact without adhering to proper statistical principles risks drawing premature conclusions from data noise, resulting in costly missteps.

To achieve this balance, it’s important to carefully define your Minimum Detectable Effect (MDE) — the smallest change in key metrics that would justify implementing a new strategy. Setting an MDE that aligns with your business goals ensures that your tests are designed to detect meaningful improvements rather than trivial fluctuations. Additionally, ensuring adequate statistical power (typically 80% or higher) helps minimize the chances of missing true effects, while setting appropriate significance levels guards against false positives.
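To judge whether an MDE is worth designing for, it can help to translate it into money. The sketch below estimates the extra monthly revenue a given relative lift in conversion rate would bring, assuming average order value stays the same and the lift holds after launch; the traffic, conversion rate, and AOV figures are hypothetical.

```python
def monthly_revenue_gain(monthly_visitors, baseline_cr, relative_lift, avg_order_value):
    """Extra revenue per month if conversion rate rises by relative_lift (AOV unchanged)."""
    extra_orders = monthly_visitors * baseline_cr * relative_lift
    return extra_orders * avg_order_value


# Hypothetical store: 90,000 visitors/month, 2% conversion rate, $120 average order value.
for lift in (0.02, 0.05, 0.10):
    gain = monthly_revenue_gain(90_000, 0.02, lift, 120)
    print(f"{lift:.0%} lift ≈ ${gain:,.0f} extra per month")
```

If a small lift would not cover the cost of building and maintaining a change, there is little point planning a test sensitive enough to detect it; setting a larger MDE keeps the test shorter and focused on changes that pay off.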

In practice, this means planning your experiments with a clear understanding of both your business objectives and the statistical requirements needed to validate those goals. By doing so, Sydney ecommerce stores can confidently interpret A/B test results, implement changes that drive real growth, and avoid the pitfalls of either overanalyzing inconsequential data or rushing into decisions without sufficient evidence. Ultimately, balancing business impact with statistical rigor empowers you to make data-driven choices that maximize both customer satisfaction and revenue.

If you found this article helpful and need help with your website conversion, contact us for a FREE CRO Audit