In ecommerce, decisions backed by data keep a store competitive, and that holds for Sydney-based online stores navigating their own market dynamics. One aspect that is often overlooked is choosing the Minimum Detectable Effect (MDE) and test duration for A/B tests so that experiments produce meaningful insights without wasting resources. Getting these parameters right keeps your experiments efficient and statistically sound, so you can implement changes that lift conversions and revenue with confidence. This guide covers data-driven strategies that help Sydney ecommerce businesses fine-tune MDE sizing and test durations and get more out of their optimization efforts.

1. Understanding MDE and Its Importance in Ecommerce Testing

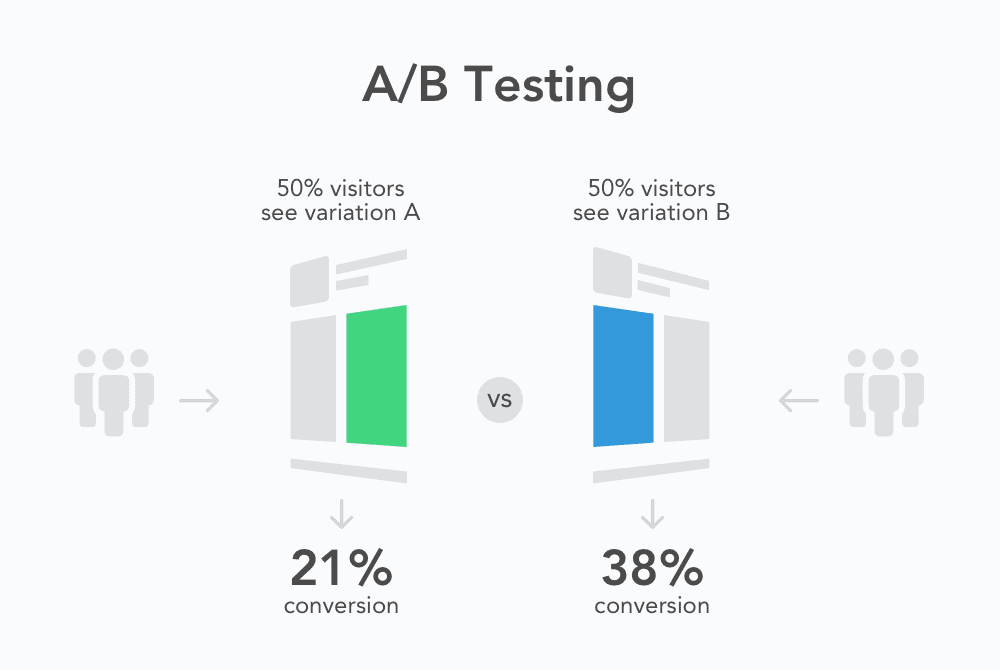

To optimize your ecommerce store's performance, you first need to understand the Minimum Detectable Effect (MDE). The MDE is the smallest true change in a key metric, such as conversion rate or average order value, that your test is designed to detect reliably, that is, with your chosen statistical power (commonly 80%) at your chosen significance level (commonly 5%). It is a planning input rather than a result: a test sized for a given MDE will usually catch effects of that size or larger, while smaller real effects will often go undetected. MDE can be stated in relative or absolute terms; for example, a 10% relative MDE on a 3% baseline conversion rate is an absolute change of 0.3 percentage points. For Sydney ecommerce stores, where competition is fierce and customer behavior can be nuanced, an appropriate MDE keeps A/B tests and other optimization experiments both practical and insightful. If your MDE is set too low, you will need very large sample sizes and long test durations, which delays decisions and increases costs. If it is set too high, you may miss valuable incremental improvements that add up over time. Choosing an MDE aligned with your business goals and traffic patterns lets you design tests that drive meaningful growth in your ecommerce store.

2. Factors Influencing MDE Sizing for Sydney Ecommerce Stores

Several factors determine how to size the MDE for a Sydney ecommerce store, and each affects the accuracy and reliability of your A/B test results. The first is your baseline conversion rate: for the same relative lift, a store with a higher baseline rate needs a smaller sample, while a store with a lower rate needs a larger one. The second is the MDE itself: required sample size grows roughly with the inverse square of the effect, so halving the MDE about quadruples the sample you need. Traffic volume is the third: stores with limited daily visitors face longer test durations when large samples are required, which delays actionable insights. Variability in customer behavior, seasonality, and marketing campaigns in the Sydney market adds noise to conversion rates and reduces the statistical power of your tests. Finally, the significance level and statistical power you choose shape the sizing decision: a stricter significance level lowers the risk of false positives and higher power lowers the risk of missed effects, but both increase the sample size and therefore the time to a result. By evaluating these factors together, Sydney ecommerce businesses can tailor their MDE sizing to keep tests efficient and decisions data-informed.
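To make the relationship between these factors concrete, here is a minimal sketch of the standard normal-approximation sample size formula for comparing two conversion rates. The function name, the default 5% two-sided significance level, and the 80% power are illustrative assumptions, not settings taken from any particular platform.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline, relative_mde, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant for a two-sided test
    comparing two conversion rates (normal approximation)."""
    p1 = baseline                         # control conversion rate
    p2 = baseline * (1 + relative_mde)    # rate if the MDE is achieved
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # power threshold
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical store: 3% baseline, looking for a 10% relative lift.
print(sample_size_per_variant(0.03, 0.10))
```

With these hypothetical inputs the result is on the order of 53,000 visitors per variant. Raising the baseline or the MDE brings the number down, and tightening `alpha` or raising `power` pushes it up.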

3. Determining Optimal Test Duration for Reliable Results

Determining the optimal test duration is a critical step in ensuring that your A/B tests deliver reliable and actionable insights for your Sydney ecommerce store. Running a test for too short a period can lead to inconclusive results, while excessively long tests may delay decision-making and slow down your optimization efforts. To strike the right balance, it’s essential to consider factors such as your store’s traffic volume, conversion rates, and the Minimum Detectable Effect (MDE) you aim to identify.

Higher-traffic stores reach the required sample size faster, which allows shorter tests without compromising reliability. Lower-traffic stores need longer test periods to gather sufficient data. And the smaller the MDE you want to detect (i.e., subtle changes in performance), the longer the test must run to measure that difference with confidence.

Statistical calculators can estimate the appropriate test duration from your specific parameters. Fix the sample size and duration before launch, and use monitoring during the test to catch data-quality problems such as broken tracking or uneven traffic splits, not to call a winner as soon as the numbers look good. By determining the test duration up front, Sydney ecommerce businesses get more from their experimentation, which leads to smarter strategies and improved conversion rates.

4. Leveraging Data to Balance Test Efficiency and Accuracy

In Sydney's competitive ecommerce market, balancing test efficiency against accuracy is central to making informed business decisions. Your own data is what makes that balance possible: historical performance metrics, conversion rates, and traffic patterns tell you which MDE is realistic for your store and which changes are large enough to matter to your bottom line. The same history lets you forecast traffic and estimate a test duration that is long enough to collect the required sample but short enough to implement improvements quickly. This approach makes better use of resources and reduces the risk of false positives and inconclusive outcomes. By working from your store's own data, you can design experiments that are both efficient and reliable, and that support sustained growth in Sydney's online marketplace.
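One way to work from your own data is to turn the question around: given the traffic you can realistically collect in a fixed window, what is the smallest effect the test could detect? The sketch below is an approximation that assumes both variants have roughly the baseline variance; the function name and defaults are illustrative.

```python
from math import sqrt
from statistics import NormalDist


def achievable_relative_mde(baseline, n_per_variant, alpha=0.05, power=0.80):
    """Approximate smallest relative lift detectable with a given
    sample size per variant (two-sided test, normal approximation)."""
    z_total = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    # Approximation: both arms are assumed to share the baseline variance.
    absolute_mde = z_total * sqrt(2 * baseline * (1 - baseline) / n_per_variant)
    return absolute_mde / baseline


# Hypothetical: 3% baseline, 4,000 visitors a day for 14 days, split 50/50.
n = 4_000 * 14 // 2
print(f"{achievable_relative_mde(0.03, n):.1%}")
```

For this hypothetical store, a two-week test can detect a relative lift of roughly 13 to 14%. If the changes you plan to test are unlikely to move conversion that much, you need either a longer test or a bolder variation.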

5. Practical Tips for Implementing MDE and Test Duration Strategies

When it comes to optimizing Minimum Detectable Effect (MDE) sizing and test duration for your Sydney ecommerce store, practical implementation is key to obtaining reliable, actionable insights without wasting time or resources. First, start by clearly defining your business goals and the specific metrics you aim to improve—whether it’s conversion rate, average order value, or customer retention. This clarity will help you set a realistic MDE that balances sensitivity with practicality; aiming for too small an effect can lead to unnecessarily long tests, while too large an MDE risks missing meaningful improvements.

Next, use historical data from your store to estimate baseline conversion rates and their variability, which are the key inputs for calculating sample size and test duration. Statistical calculators or A/B testing platforms with built-in sample size estimation can simplify this step. Factor in your site's traffic volume and purchase frequency as well, because they determine how quickly a test reaches its required sample size.
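A minimal sketch of that first step, assuming you can export daily visitor and order counts from your analytics tool. The figures in `history` are made up for illustration.

```python
from statistics import stdev


def baseline_summary(daily_counts):
    """Summarise (visitors, orders) pairs, one pair per day.

    Returns the pooled conversion rate, the standard deviation of the
    daily conversion rates, and the average daily visitors.
    """
    total_visitors = sum(visitors for visitors, _ in daily_counts)
    total_orders = sum(orders for _, orders in daily_counts)
    pooled_rate = total_orders / total_visitors
    daily_rates = [orders / visitors for visitors, orders in daily_counts]
    return pooled_rate, stdev(daily_rates), total_visitors / len(daily_counts)


# Hypothetical week of data: (visitors, orders) per day.
history = [(4100, 118), (3900, 121), (4000, 115), (4200, 130),
           (4500, 150), (5200, 171), (4800, 149)]
rate, daily_sd, avg_visitors = baseline_summary(history)
print(f"baseline {rate:.2%}, daily sd {daily_sd:.2%}, {avg_visitors:.0f} visitors/day")
```

The pooled rate feeds the sample size calculation, the average daily visitors feed the duration estimate, and a large day-to-day spread is a hint that weekday effects or campaigns deserve a closer look before you test.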

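During the testing phase, run each test for at least one full business cycle, usually a minimum of one to two whole weeks, so that every day of the week is represented and day-of-week swings in shopper behavior average out. A test of that length cannot average out seasonal effects, so avoid scheduling it across unusual periods such as major sales events unless those periods are what you want to measure. Do not stop a test early, even if preliminary results look promising: acting on an interim result inflates the false positive rate, and abandoning a test that looks flat can hide a real effect.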
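The cost of stopping early can be shown with a small simulation. The sketch below runs A/A tests, where both arms share the same true conversion rate, so any "significant" result is a false positive. It compares checking once at the end with checking every day and stopping at the first significant reading. All parameters are hypothetical, and the significance check is a plain two-proportion z-test.

```python
import random
from math import sqrt
from statistics import NormalDist


def is_significant(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Two-sided, two-proportion z-test with a pooled standard error."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return False
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z))) < alpha


def simulate_aa_tests(runs=300, days=14, daily_per_arm=200, rate=0.05, seed=1):
    """Return (false positive rate with daily peeking, rate with one final look)."""
    rng = random.Random(seed)
    peeking_hits = 0
    final_hits = 0
    for _run in range(runs):
        conv_a = conv_b = visitors = 0
        flagged_on_any_day = False
        for _day in range(days):
            conv_a += sum(rng.random() < rate for _ in range(daily_per_arm))
            conv_b += sum(rng.random() < rate for _ in range(daily_per_arm))
            visitors += daily_per_arm
            if is_significant(conv_a, visitors, conv_b, visitors):
                flagged_on_any_day = True
        peeking_hits += flagged_on_any_day
        final_hits += is_significant(conv_a, visitors, conv_b, visitors)
    return peeking_hits / runs, final_hits / runs


peeking_rate, final_rate = simulate_aa_tests()
print(f"daily peeking: {peeking_rate:.1%}, single final look: {final_rate:.1%}")
```

The single final look should land near the nominal 5%, while the daily-peeking rate is typically several times higher, which is the reason to fix the duration before launch.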

Finally, document all test parameters, results, and learnings to build a knowledge base for future experiments. Iteratively refine your MDE and test duration strategies based on past outcomes and evolving business priorities. By combining data-driven planning with disciplined execution, Sydney ecommerce stores can optimize their testing efforts, accelerating growth while minimizing risk.

6. Case Studies: Successful Optimization in Sydney Ecommerce Businesses

To illustrate the impact of effectively optimizing Minimum Detectable Effect (MDE) sizing and test duration, let’s explore some real-world examples from Sydney-based ecommerce stores that have harnessed data-driven strategies to boost their conversion rates and overall performance.

1. Urban Outfitters Sydney – Reducing Test Duration without Sacrificing Accuracy

Urban Outfitters Sydney faced the challenge of minimizing the time their A/B tests took while maintaining statistical confidence. By recalibrating their MDE to align closely with their business goals and leveraging historical conversion data, they shortened their average test duration by 30%. This acceleration allowed the marketing team to implement winning variations faster, resulting in a 12% uplift in sales over six months.

2. GreenLeaf Organics – Balancing MDE and Sample Size for Cost Efficiency

GreenLeaf Organics, a niche retailer of organic products, was constrained by a limited web traffic volume. By optimizing MDE sizing to detect meaningful yet realistic improvements, they reduced the required sample size for their tests. This strategic adjustment enabled them to run more frequent experiments without overextending resources. The outcome was a 15% increase in email sign-ups and a 10% boost in average order value within three months.

3. TechGear Australia – Leveraging Data-Driven Test Planning to Drive Revenue Growth

TechGear Australia implemented a rigorous data-driven framework for determining MDE and test duration, factoring in their average order value, traffic fluctuations, and seasonal trends. By tailoring their experiments accordingly, they avoided prolonged tests that often skewed results. This optimized approach led to a 20% increase in checkout completion rates and improved customer retention metrics.

These case studies demonstrate that Sydney ecommerce businesses can gain a competitive edge by fine-tuning their experimentation strategies through careful optimization of MDE sizing and test duration. Embracing a data-driven mindset not only improves the reliability of test results but also accelerates decision-making, fueling sustainable growth in a dynamic market.

7. Common Pitfalls and Misconceptions in MDE and Test Duration

Many Sydney ecommerce stores run into the same pitfalls and misconceptions when sizing MDE and test duration, and these undermine their experiments. One frequent mistake is setting an unrealistically small MDE, which leads to very long test durations and delayed decisions. A smaller MDE is appealing for detecting subtle changes, but it can drain resources and stall optimization efforts without providing timely insights. At the other extreme, some businesses assume that shorter tests simply yield faster results and neglect the statistical power needed to draw reliable conclusions. The trade-off here is between test duration and statistical power: a test that is too short is likely to miss real effects, and the significant results it does produce tend to overstate the true effect, which leads to misguided business decisions. Another misconception is that traffic quality and segmentation can be ignored. Customer behavior differs across segments, and failing to account for those differences can mask true effects and skew results. Many stores also underestimate external factors such as seasonality, promotions, or website updates, which introduce noise and confound test outcomes if they are not controlled. Avoiding these pitfalls helps Sydney ecommerce businesses keep their MDE sizing and test durations aligned with realistic goals and get actionable insights from their experiments.

8. Tools and Frameworks for Accurate MDE Calculations

The right tools and frameworks make MDE sizing and test duration planning far more reliable for Sydney ecommerce stores. Accurate MDE calculations keep A/B tests from being underpowered or unnecessarily prolonged, which saves both time and resources. In Python, libraries such as `statsmodels` and `scipy` support power analysis and sample size estimation based on your metrics and chosen significance level, and R offers comparable packages such as `pwr`. Dedicated experimentation platforms like Optimizely and VWO come with built-in calculators that simplify MDE estimation and integrate with your ecommerce data. Google Optimize, once a common choice, was discontinued by Google in September 2023, so stores that relied on it need an alternative. For more tailored needs, Bayesian A/B testing offers an alternative to the classical approach and reports results as probabilities, such as the chance that a variant beats the control. Using these tools streamlines your testing strategy and lets Sydney ecommerce businesses optimize conversion rates and customer experiences with statistical rigor.
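For a sense of what the Bayesian approach reports, here is a minimal sketch using only Python's standard library. It assumes a uniform Beta(1, 1) prior on each conversion rate and estimates by Monte Carlo sampling the probability that variant B has the higher true rate. The function name, the prior, and the example counts are assumptions for illustration, and this is not how any specific platform implements its Bayesian engine.

```python
import random


def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20_000, seed=0):
    """Estimate P(rate_B > rate_A) under independent Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Posterior for each arm is Beta(1 + conversions, 1 + non-conversions).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws


# Hypothetical results: 300 of 10,000 convert on A, 345 of 10,000 on B.
print(f"P(B beats A) = {prob_b_beats_a(300, 10_000, 345, 10_000):.1%}")
```

A probability statement like this is often easier for a business audience to act on than a p-value, but it still depends on having collected enough data, so the sample size planning described earlier remains relevant.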
